A few big companies are banning the use of ChatGPT and other AI tools like Bard and Bing. Is this a good decision, or are they missing out on the opportunities these smart tools bring? And are there really bad consequences to using ChatGPT at work? Until a month or two ago, not everybody knew about AI tools, but since the arrival of ChatGPT, the field has taken off.

If you are still not familiar with it, ChatGPT is an advanced language model developed by OpenAI. It generates text that closely resembles human writing. Its capabilities include answering queries, translating languages, generating narratives and poems, summarizing text, assisting with code, and various other uses.

The reasons behind the ban on ChatGPT

The reasons why big companies have banned their employees from using ChatGPT all revolve around three concerns: misleading or incorrect information, data leakage and loss of confidentiality, and the fear of human labor being replaced. Employees who accidentally upload sensitive code or information to the platform could cause data breaches and expose that data to external users.

Potential Risks

Since ChatGPT is trained on a large amount of data, the quality and accuracy of that data influence its responses. If the training data contains mistakes or missing information, the model’s output may reflect those flaws. ChatGPT cannot independently validate the accuracy of the information in its training data, which can result in wrong or misleading responses. Therefore, companies that rely on ChatGPT to generate new content or code face a higher risk of errors and inaccuracies. A few big companies have also raised concerns that data entered into artificial intelligence platforms like Google Bard and Bing is stored on external servers.

As ChatGPT learns from the input it receives, it may inadvertently disclose sensitive information. This could include trade secrets, confidential data, or any other sensitive information. Another risk of using ChatGPT in the workplace is the replacement of human labor. This applies especially to jobs in tech and administrative work, because the tool can write code and handle related data entry and content creation.


Companies that have banned AI tools, and their reasons

Samsung

According to Samsung, data stored on external servers is difficult to retrieve and delete, and could be disclosed to unintended recipients. Samsung has urged its employees who use ChatGPT and similar programs on personal devices to avoid sharing any company-related or personal data that may expose its intellectual property. According to the company, failure to comply with these new standards may result in termination.

Apple

Apple has also restricted the use of these tools to reduce the accidental release of confidential data, as part of its efforts to safeguard sensitive information. The move comes alongside Apple’s goal of building in-house technology with similar capabilities. This suggests that we are going to see many more AI tools in the future.

| Company | Reason to Ban AI Tools |
| --- | --- |
| Apple | Confidential data leaks. |
| Samsung | Accidental leaks of secret information, including internal code and meeting recordings. |
| Calix | Risk of exposure of sensitive information like confidential internal memos or customer contracts under NDA to outsiders. |
| Citigroup | Due to restrictions that are imposed around third-party software. |
| Deutsche Bank | To protect the bank from data leakage. |
| Goldman Sachs | Due to restrictions that are imposed around third-party software. |
| JPMorgan Chase | To avoid potential regulatory pitfalls over sharing sensitive financial information with a third-party platform. |
| Verizon | Concern about privacy and security with ChatGPT. |

Some companies do not ban AI tools outright; they only restrict employees from entering essential company information into them.

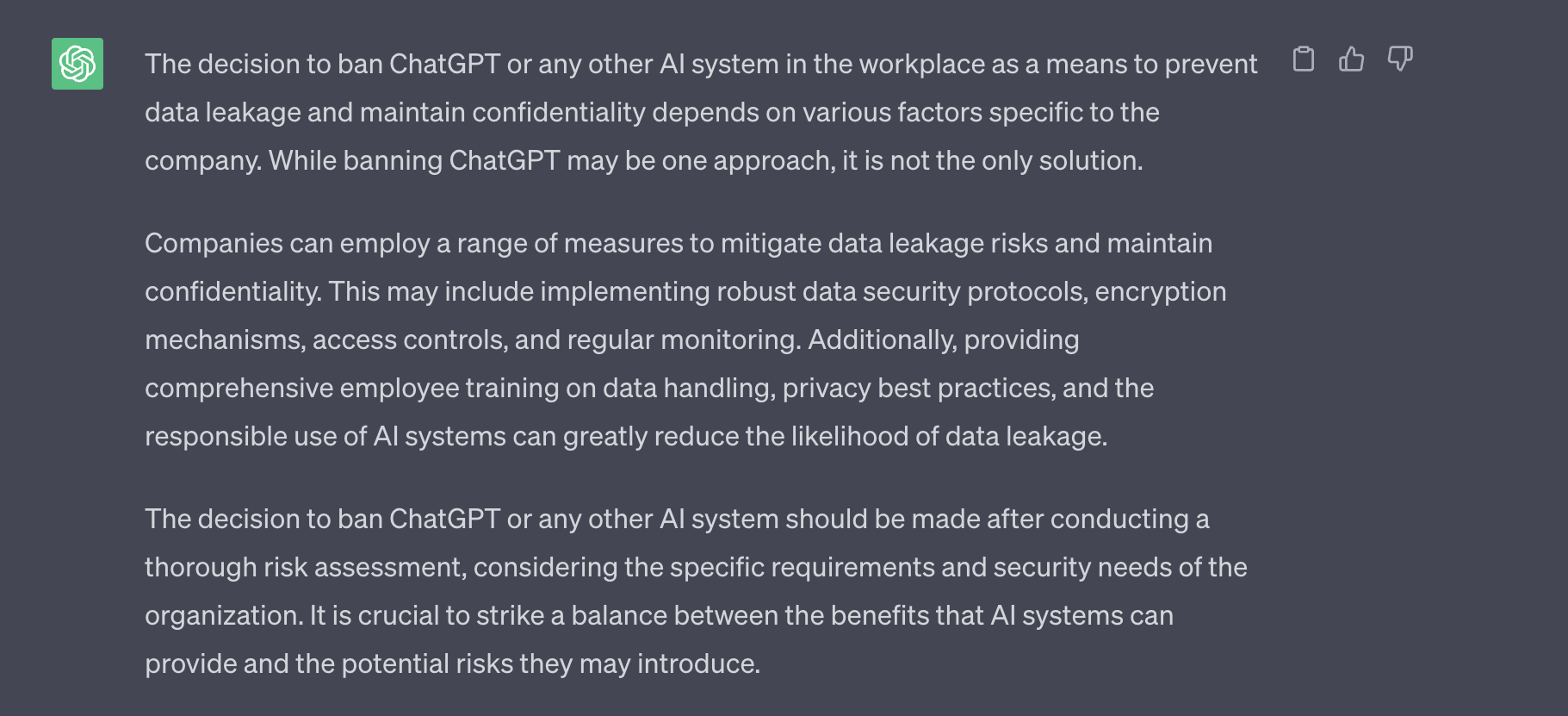

Does ChatGPT think the workplace should ban it?

We asked ChatGPT this exact question: “Is it necessary for a company to ban ChatGPT at the workplace to prevent data leakage and confidentiality?” The following response is from the AI tool itself.

On the other hand, according to AI consultants like Rocío Bachmaier, banning AI tools in the workplace will end up hurting the company itself.

Successfully implementing ChatGPT can significantly impact your organization’s productivity, growth and innovation.

From this, we can conclude that banning ChatGPT without serious assessment is a rushed move motivated by fear rather than thoughtful reasoning. When evaluating the value of a proven new technology, it is critical to face risks head-on and limit any potential negative results rather than ignore the potential advantages.

To help employees navigate AI, companies can educate them, establish clear norms and policies, promote a culture of accountability, and prioritize ethical considerations. It is essential to exercise caution not only while using ChatGPT but also while using any other technology that deals with sensitive data. Managing security risks becomes essential, and one option is to create policies that help employees handle these risks.
