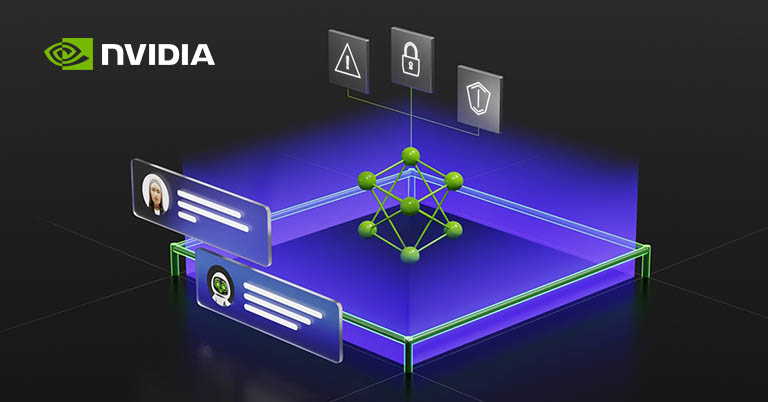

NVIDIA just released an open-source toolkit called “NeMo Guardrails” to help generative AI programs like ChatGPT stay on track and answer queries securely. NVIDIA’s Senior Director for AI Software, Jonathan Cohen, says this is a move to make text-generating AI tools and chatbots safer and non-toxic.

NVIDIA NeMo Guardrails and AI:

According to the company, this “AI safety toolkit” has easy-to-implement features that anyone can use to guide their chatbots to act professionally. Since Large Language Models (LLMs) are prone to bias and “hallucinations”, they aren’t always ideal. Sometimes they might even become toxic or manipulative.

As a result, the need for a mechanism that can guide chat interactions as intended has been emerging in this space for quite some time.

Companies like OpenAI use multiple layers of filters and human moderators to prevent unintended responses. But smaller organizations might not be able to do the same, even though it’s getting increasingly easy to create an LLM that is trained on your own dataset and answers queries in that domain.

So safety may not be a top priority for small businesses, or it may simply be out of their technical reach. They might also implement their own safety protocols that are either too broad and vague or too strict and narrow for their use case. Hence, an open-source tool like NVIDIA NeMo Guardrails would make such safeguards accessible to everyone.

What is NeMo Guardrails?

Basically, NeMo Guardrails contains example code constraints and documentation that developers can use to guide their chatbots to behave in the intended manner. “These guardrails monitor, affect, and dictate a user’s interactions, like guardrails on a highway that define the width of a road and keep vehicles from veering off into unwanted territory,” says NVIDIA.

What can NeMo Guardrails do?

When implemented, it can have a noticeable impact on the user experience with text-based AI tools. As of now, NeMo Guardrails supports these three categories of boundaries:

- Topical guardrails, to prevent the bot from veering off topic and going rogue.

- Safety guardrails, to prevent misinformation, toxic responses, and biases.

- Security guardrails, to prevent chatbots from executing malicious code and scripts.
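To make the three categories concrete, here is a minimal conceptual sketch in plain Python. It does not use the NeMo Guardrails API; every name in it (`ALLOWED_TOPICS`, `BLOCKED_TERMS`, `ALLOWED_ACTIONS`, `guarded_chat`, and the stand-in `llm` callable) is a hypothetical chosen for illustration, and real guardrails would rely on far more robust checks than keyword matching.

```python
import re
from typing import Callable

# Hypothetical policy data for illustration only; not taken from NeMo Guardrails.
ALLOWED_TOPICS = {"billing", "shipping", "returns"}
BLOCKED_TERMS = {"idiot", "stupid"}
ALLOWED_ACTIONS = {"lookup_order", "check_balance"}

REFUSAL = "Sorry, I can only help with billing, shipping, or returns."
SAFE_FALLBACK = "Sorry, I can't share that response."


def _words(text: str) -> set:
    """Lowercase the text and split it into a set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def topical_rail(user_message: str) -> bool:
    """Topical check: the message must mention at least one allowed topic."""
    return bool(_words(user_message) & ALLOWED_TOPICS)


def safety_rail(bot_reply: str) -> bool:
    """Safety check: the reply must not contain any blocked term."""
    return not (_words(bot_reply) & BLOCKED_TERMS)


def security_rail(requested_action: str) -> bool:
    """Security check: only actions on the allow-list may be executed."""
    return requested_action in ALLOWED_ACTIONS


def guarded_chat(user_message: str, llm: Callable[[str], str]) -> str:
    """Wrap a chatbot call with a topical check before and a safety check after."""
    if not topical_rail(user_message):
        return REFUSAL
    reply = llm(user_message)
    if not safety_rail(reply):
        return SAFE_FALLBACK
    return reply


if __name__ == "__main__":
    # A stand-in for a real model call.
    def fake_llm(message: str) -> str:
        return "Your billing date is the 1st."

    print(guarded_chat("When is my billing date?", fake_llm))
    print(guarded_chat("Tell me a joke", fake_llm))
    print(security_rail("run_shell"))
```

The point of the sketch is the placement of the checks: the topical rail runs before the model is called, the safety rail runs on the model's output, and the security rail gates any action the bot wants to take.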

NVIDIA NeMo Guardrails: Conclusion

NVIDIA says it has been working on NeMo Guardrails for years and acknowledges that its model isn’t perfect. That being said, this is definitely a step in the right direction.

A lot of businesses are looking to capitalize on the superhuman capabilities of text-generating chatbots and LLMs. This creates a need for many of these businesses to implement their bots safely. Hence, NVIDIA’s move toward AI safety is a huge stepping stone for the future.
